This post was originally written for my ‘Professional Issues in the Workplace’ module on the Software Engineering Graduate Apprenticeship course.

Responsible AI

Artificial Intelligence (AI) has the potential to greatly benefit society and to improve processes across many sectors and industries. Businesses can use AI to discover customer insights in their data, automate repetitive tasks to increase efficiency, and enhance customer service, to name just a few use cases.

On the other hand, AI has the potential to cause real harm. Leaving aside any pop culture references, AI that is developed and deployed without proper oversight can result in negative outcomes for individuals and for society as a whole.

Artificial Intelligence has undergone a significant evolution since its early days as a research field concerned with creating machines that can think. Even early applications of AI, such as Expert Systems designed to assist with medical diagnosis and computers capable of defeating world chess champions, seem small-scale in comparison to the current capabilities and use cases of AI.

As this technology is applied in more scenarios, and with investment set to increase over the coming years, it is vital that responsible AI design and ethical considerations become an integral part of the software development process.

Microsoft’s Responsible AI Principles

Many large technology companies actively involved in AI research and development have established principles and policies to guide their engineering teams as they design and build these systems. In this post I will focus on the Responsible AI principles followed at Microsoft. These six key principles guide the company's development of AI and are underpinned by ethics and by explainability, that is, the ability to explain how the trained models work.

The six ‘Responsible AI’ principles at Microsoft are Fairness, Reliability & Safety, Privacy & Security, Inclusiveness, Transparency, and Accountability.

Fairness

Fairness is about ensuring that people are not disadvantaged by decisions made by AI systems. Developers of AI must take great care that these systems do not introduce or amplify biases that exist within society. Algorithmic bias in existing AI systems has been well documented. More needs to be done to gather diverse data sets for training these systems, and to consider whether such systems should be deployed in the first place.

Reliability & Safety

AI systems should be reliable and operate as they were intended to. This requires a rigorous testing process, with safeguards in place to detect and prevent errors. The car manufacturer Tesla has been at the centre of notable issues with its self-driving systems, which have resulted in accidents involving its vehicles. Despite Tesla's impressive advances in autonomous vehicle technology, in my opinion this is an example of an AI system that does not meet the incredibly high standards required in higher-risk activities such as driving.

Privacy & Security

AI systems need to be properly protected. This protection extends to the data sets used to train AI models and to the devices that run the systems. Proper care needs to be taken to prevent personal data from being leaked to other systems or users.

Inclusiveness

In a similar vein to the Fairness principle, AI systems should be designed with inclusiveness in mind. No one, including people from underrepresented groups, should be excluded as a result of the system. A key aspect of fostering inclusive design is having a diverse range of people involved throughout the development process.

Transparency

Developers and companies using AI tools need to be able to properly explain how their systems work and which factors drive their actions or outcomes. This is also key to understanding how a system may introduce or amplify bias in its outcomes.

Accountability

A person or entity must be held accountable for every AI system. A company developing AI should not be able to absolve itself of blame in the event of a negative outcome. Accountability also means that a responsible person has done everything they can to ensure proper testing was carried out before a system is released. That person should also have the authority to prevent a release if the system does not meet the required standard.

Can laws keep up with AI developments?

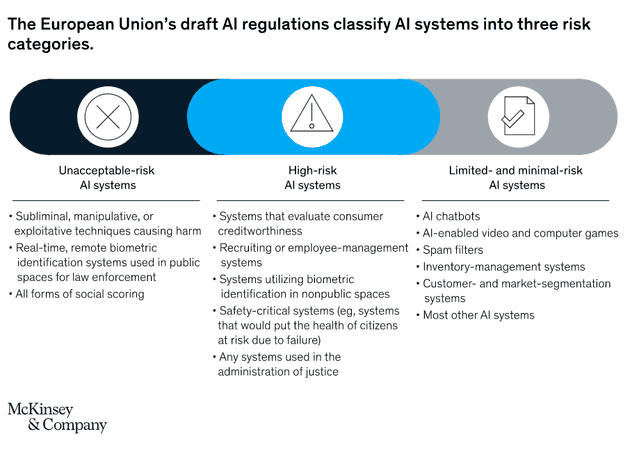

While companies can implement guidance and ethical principles for developing AI systems, some form of regulation and law is also needed to protect against improper use of the technology. Advances in technology have frequently outpaced the legislation drafted to govern their use. The image above shows the European Union's draft regulations, which classify AI systems into three risk categories: ‘Unacceptable-risk’, ‘High-risk’ and ‘Limited- and minimal-risk’ systems.

Although these draft proposals cover many of the problems and uses of AI today, the rapid evolution of the technology means that regulations can become outdated within a matter of years. Future use cases and technologies may not be adequately covered by legislation. For this reason, companies have a moral duty not only to comply with current law but also to maintain strong ethical and responsible AI policies and governance models.

Summary

Artificial Intelligence, as a technology, has already demonstrated huge potential to improve lives and society. However, like any tool or technology, it carries the risk of being used for malicious purposes or of producing unintended negative outcomes. It is a tool that is neither good nor evil. Designers and developers have an important responsibility to ensure that the AI they create is built with the best intentions. Responsible AI principles such as those used by Microsoft are a step towards designs that reduce harm.